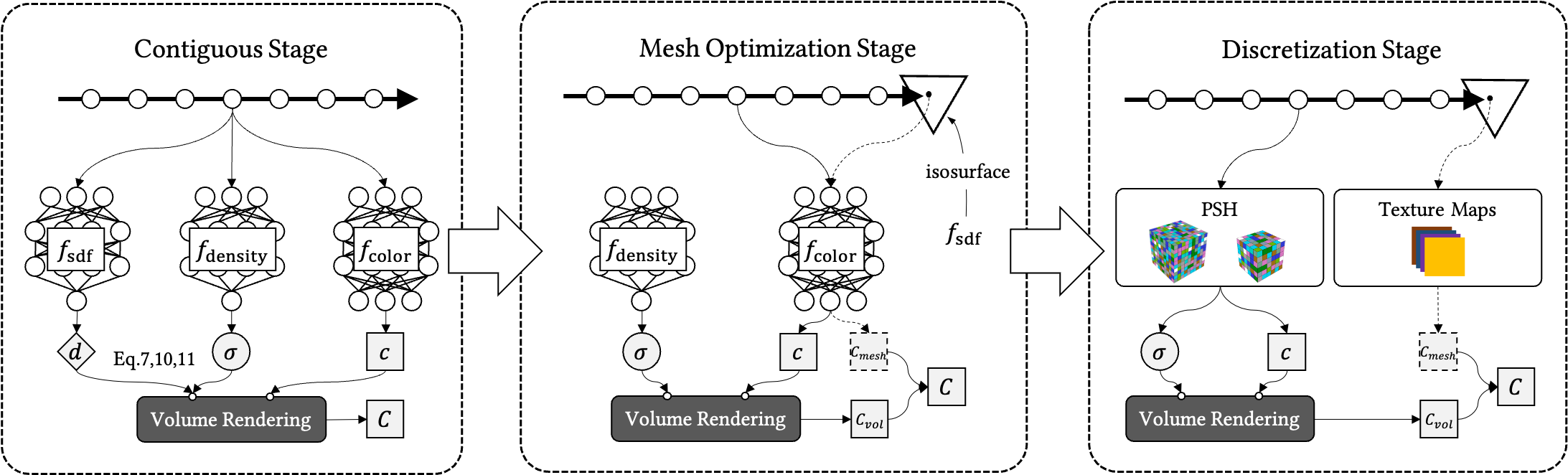

Pipeline

We propose to obtain such a representation from multi-view images of an object in three stages. First, we train a continuous form of the representation, in which the surface part is modeled by a neural signed distance field and the volume part by a neural density field (left). Next, we fix the learned signed distance field and extract a triangular mesh from it; the mesh substitutes for the signed distance field and is rendered jointly with the neural density field (middle). We use differentiable isosurface extraction and differentiable rasterization to obtain high-quality meshes that align well with the implicit geometry. Finally, we discard all neural networks and discretize the representation into final assets for efficient storage and rendering (right). Concretely, the triangular mesh is simplified and UV-parametrized, and the neural density field is voxelized and pruned into a sparse volume, which is then organized by perfect spatial hashing to support fast indexing and compact storage.
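The paragraph does not spell out how the extracted mesh and the density field are combined when rendered jointly. The following is a minimal sketch under that uncertainty, assuming the mesh acts as an opaque surface: density samples in front of the rasterized hit depth are alpha-composited with the standard volume-rendering quadrature, and the surface color receives whatever transmittance remains. The function `composite_ray` and its parameters are hypothetical names for illustration, not the authors' renderer.

```python
import math


def composite_ray(sigmas, colors, deltas, t_samples, t_surface, surface_color):
    """Composite volume samples along one ray in front of an opaque mesh hit.

    ASSUMPTION: the mesh is opaque and the volume uses NeRF-style quadrature.
    sigmas, colors, deltas, t_samples: per-sample density, RGB, segment length, depth
    t_surface: depth of the rasterized mesh hit (math.inf if the ray misses the mesh)
    surface_color: RGB shaded from the mesh at that hit
    """
    transmittance = 1.0
    out = [0.0, 0.0, 0.0]
    for sigma, rgb, delta, t in zip(sigmas, colors, deltas, t_samples):
        if t >= t_surface:
            break  # samples behind the opaque surface are occluded
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        for c in range(3):
            out[c] += transmittance * alpha * rgb[c]
        transmittance *= 1.0 - alpha
    if math.isfinite(t_surface):
        # The opaque surface absorbs all light that survives the volume in front of it.
        for c in range(3):
            out[c] += transmittance * surface_color[c]
    return out
```

With this ordering, a semi-transparent volume (for example fur or smoke) in front of the mesh tints the surface, while volume samples behind the surface are skipped entirely, which is one reason a hybrid of this kind can be cheaper to render than a pure volume.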
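The voxelize-and-prune step can be illustrated with a small sketch. It assumes the trained density field can be queried as a plain function `density_fn(x, y, z)` (a hypothetical stand-in for the network), samples it at the centers of a uniform grid over an axis-aligned bounding box, and keeps only voxels whose density exceeds a threshold. Pruning purely by a density threshold is an assumption here; other criteria, such as visibility from the training views, would also fit the description.

```python
def voxelize_and_prune(density_fn, resolution, bbox_min, bbox_max, threshold):
    """Sample density_fn on a resolution^3 grid and keep voxels above threshold.

    density_fn: hypothetical callable (x, y, z) -> density, standing in for the trained field
    bbox_min, bbox_max: (x, y, z) corners of the axis-aligned bounding box
    Returns a sparse dict mapping integer voxel coords (i, j, k) -> sampled density.
    """
    size = [(hi - lo) / resolution for lo, hi in zip(bbox_min, bbox_max)]
    sparse = {}
    for i in range(resolution):
        for j in range(resolution):
            for k in range(resolution):
                # World-space position of this voxel's center.
                center = tuple(lo + (idx + 0.5) * s
                               for lo, idx, s in zip(bbox_min, (i, j, k), size))
                sigma = density_fn(*center)
                if sigma > threshold:  # empty space is discarded
                    sparse[(i, j, k)] = sigma
    return sparse
```

For instance, a fuzzy sphere of radius 0.5 sampled on an 8³ grid over [-1, 1]³ keeps only the 32 voxels whose centers lie inside the sphere, so most of the 512 grid cells never need to be stored.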
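Perfect spatial hashing (Lefebvre and Hoppe, 2006) stores a sparse set of grid points in a dense table of roughly the same size with collision-free lookups: a point p is hashed as h(p) = (h0(p) + Φ[h1(p)]) mod m, where h0(p) = p mod m, h1(p) = p mod r, and Φ is a small offset table. Below is a simplified sketch of that construction, not the authors' implementation: offsets are found by greedy brute-force search, placing larger buckets first. It assumes gcd(m, r) = 1 and coordinates in [0, m·r), so that points sharing a bucket never collide with each other. The names `build_perfect_hash` and `lookup` are hypothetical, and storing each key next to its payload to detect empty voxels is a simplification; the original paper discusses more compact options.

```python
from collections import defaultdict
from itertools import product


def _flat(v, n):
    # Flatten a 3D index in [0, n)^3 into a 1D index in [0, n**3).
    return (v[0] * n + v[1]) * n + v[2]


def build_perfect_hash(voxels, m, r):
    """voxels: dict mapping (x, y, z) non-negative int tuples -> payload.

    m: side of the hash table (m**3 slots); r: side of the offset table (r**3 entries).
    Returns (offsets, table); each table slot holds (key, payload) or None.
    """
    if len(voxels) > m ** 3:
        raise ValueError("hash table too small for the number of voxels")
    # Points with the same h1(p) = p mod r share one offset, so group them.
    buckets = defaultdict(list)
    for p in voxels:
        buckets[tuple(c % r for c in p)].append(p)
    table = [None] * (m ** 3)
    offsets = [(0, 0, 0)] * (r ** 3)
    # Place the largest buckets first, while the table is still mostly empty.
    for h1, pts in sorted(buckets.items(), key=lambda kv: -len(kv[1])):
        for off in product(range(m), repeat=3):
            slots = [_flat(tuple((c + o) % m for c, o in zip(p, off)), m) for p in pts]
            if len(set(slots)) == len(slots) and all(table[s] is None for s in slots):
                for p, s in zip(pts, slots):
                    table[s] = (p, voxels[p])
                offsets[_flat(h1, r)] = off
                break
        else:
            raise ValueError("no collision-free offset found; try a larger r")
    return offsets, table


def lookup(p, offsets, table, m, r):
    """Return the payload stored for tuple p, or None if p is not in the sparse set."""
    off = offsets[_flat(tuple(c % r for c in p), r)]
    entry = table[_flat(tuple((c + o) % m for c, o in zip(p, off)), m)]
    # Comparing the stored key separates real voxels from empty cells that hash here.
    return entry[1] if entry is not None and entry[0] == p else None
```

A lookup costs two table reads and no probing, which is what makes this layout attractive for fetching sparse voxels during rendering.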